Browser Web Scraping - Scrape Data With Chrome Developer Tools (Chrome DevTools)

Scraping data using vanilla JavaScript with the Chrome Developer Tools has never been easier! When you think of web scraping, you probably picture a fancy Python script using a library such as Scrapy or BeautifulSoup, or a flashy Node.js application using Axios. What if I told you that you didn't need any of that? Everything you need to scrape data from a web page is baked directly into the Chrome browser. In this example, I will show you how easy it is to scrape data with the Chrome Developer Tools. First, let's cover some basics about the Chrome Developer Tools (DevTools).

What are the Chrome Developer Tools (DevTools)?

Google Chrome provides a suite of tools specifically for developers called the Chrome DevTools. They let developers inspect and manipulate the Document Object Model (DOM), diagnose web issues, and monitor website performance and network activity. These tools assist developers on both the front end and the back end of the web development process.

What are the Advantages & Benefits of Chrome DevTools?

The primary advantage of the Chrome DevTools is that they are already built into the Google Chrome browser. Their benefits include viewing and modifying rendered HTML, CSS, and JavaScript and monitoring network activity in real time. This lets developers quickly diagnose and fix problems in the Document Object Model (DOM) and in network requests.

What are the Chrome Developer Tools Designed For?

The Chrome DevTools are designed to assist web developers and programmers in inspecting and modifying rendered HTML, CSS, and JavaScript to streamline the development process and respond to programming and network issues.

What Languages Do DevTools Use?

The Google Chrome DevTools work with the three core languages of the web: HTML (through the Document Object Model, or DOM), CSS, and JavaScript. Supporting all three lets web developers and programmers build faster and troubleshoot web pages efficiently.

How to Open Chrome DevTools

The most basic way to open the Google Chrome DevTools is to click the three-dot menu button to the right of the Chrome address bar and then select More Tools > Developer Tools.

On Windows and Linux, the F12 key also opens the Chrome DevTools, showing the last panel you used.

The following sections provide additional keyboard shortcuts for opening the Chrome DevTools.

What are the Shortcuts for DevTools on Windows?

The keyboard shortcut for opening the Chrome DevTools elements tab on Windows is Ctrl + Shift + C. Additionally, you can jump directly to the JavaScript console from the keyboard by hitting Ctrl + Shift + J.

What are the Shortcuts for DevTools on Linux?

The keyboard shortcut for opening the Chrome DevTools elements tab on Linux is Ctrl + Shift + C. Additionally, you can jump directly to the JavaScript console from the keyboard by hitting Ctrl + Shift + J.

What is the Shortcut for DevTools on Mac?

The keyboard shortcut for opening the Chrome DevTools elements tab on macOS is Cmd + Option + C. Additionally, you can jump directly to the JavaScript console from the keyboard by hitting Cmd + Option + J.

What is Browser Web Scraping?

Browser web scraping is the practice of extracting data from websites, for purposes such as research, analysis, or automation, using the browser's built-in developer tools. Because the user triggers each page load directly, this technique sends only a few requests, whereas other web scrapers typically automate many requests, often sent asynchronously, to the web server. Keep in mind that many websites disallow automated access to some or all of their pages in their robots.txt file. This demo uses a hybrid technique that relies on the user to request pages directly in the browser, which protects web servers from being flooded with requests. Good manners matter here: keeping your requests few and deliberate is the polite way to interact with web resources.
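Checking robots.txt before you scrape is part of those good manners. The sketch below is a deliberately simplified reader with hypothetical function names: it only collects `Disallow` rules from groups addressed to every crawler (`User-agent: *`) and ignores `Allow` rules and wildcards, so a real crawler should follow the full Robots Exclusion Protocol (RFC 9309) instead.

```javascript
// Simplified sketch: list the Disallow rules that robots.txt applies to all
// user agents ("User-agent: *"). It deliberately ignores Allow rules,
// wildcard patterns (* and $), and groups aimed at specific crawlers.
function disallowedForAll(robotsTxt) {
  const rules = [];
  let groupAgents = [];
  let readingAgents = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*/, '').trim(); // strip comments
    const match = line.match(/^([A-Za-z-]+)\s*:\s*(.*)$/);
    if (!match) continue; // skip blank or malformed lines

    const field = match[1].toLowerCase();
    const value = match[2].trim();

    if (field === 'user-agent') {
      // Consecutive User-agent lines share one group; a User-agent line
      // that follows rules starts a new group.
      if (!readingAgents) groupAgents = [];
      groupAgents.push(value);
      readingAgents = true;
    } else {
      readingAgents = false;
      // An empty Disallow value means nothing is disallowed, so skip it.
      if (field === 'disallow' && value && groupAgents.includes('*')) {
        rules.push(value);
      }
    }
  }
  return rules;
}

// True if `path` starts with any Disallow prefix that applies to every agent.
function isDisallowed(robotsTxt, path) {
  return disallowedForAll(robotsTxt).some((prefix) => path.startsWith(prefix));
}
```

In the DevTools console you could fetch the current site's file with `const robotsTxt = await fetch('/robots.txt').then((response) => response.text());` and then check a path with `isDisallowed(robotsTxt, '/npm/')`.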

Scrape Data Using JavaScript with Chrome Developer Tools (DevTools)

In this example, we will scrape new npm package names from Libraries.io, which tracks open-source packages. To start, open Chrome and navigate to the Libraries.io page that lists new npm packages. To extract data from the page, open the Chrome Developer Tools (DevTools) with the keyboard shortcut Ctrl + Shift + C on Windows/Linux or Cmd + Option + C on macOS, which opens the DevTools Elements tab.

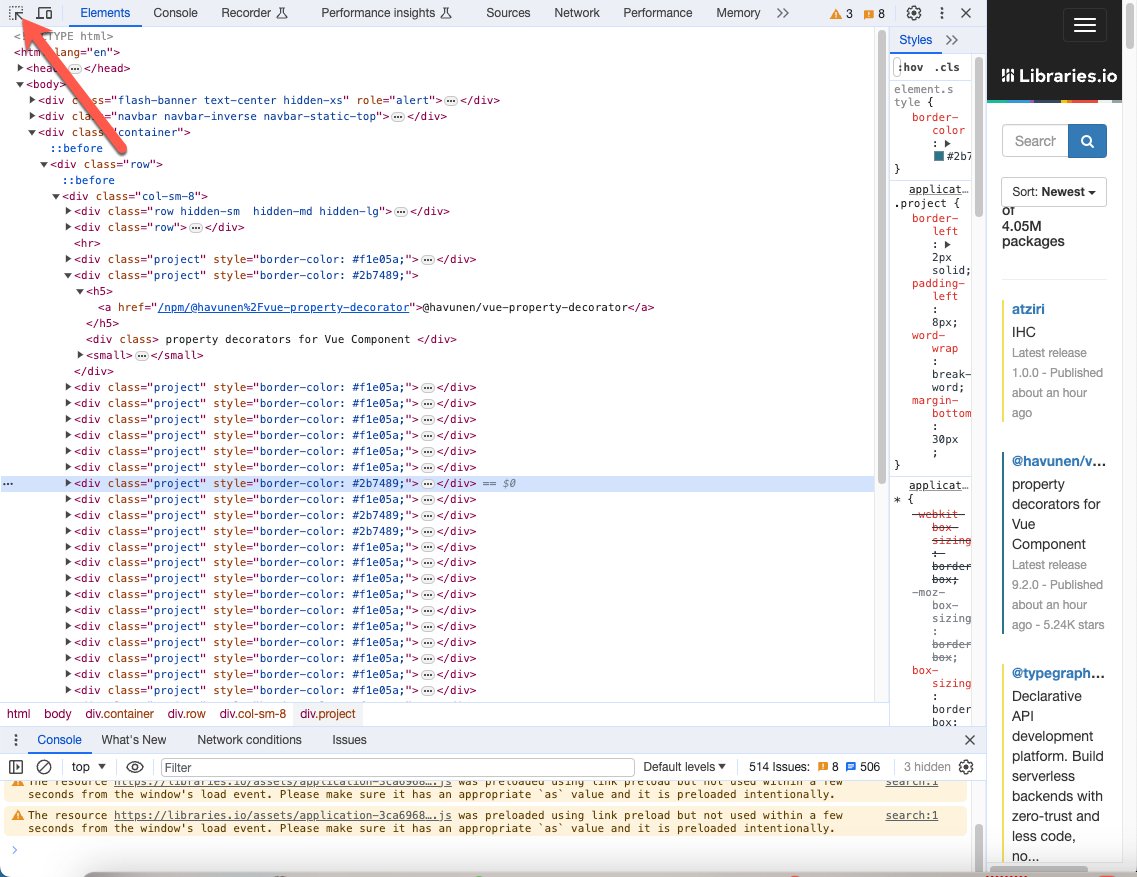

Select Website Tag for Web Scraping

First, we need to understand the HTML DOM we are interacting with. In this demo, the path in each anchor tag's href attribute will serve as the input to the JavaScript function we are going to write, which grabs and stores data from the page. In DevTools, open the Elements tab and click the element selector tool (the arrow icon in the top-left corner of DevTools), which lets us highlight the HTML we want to inspect.

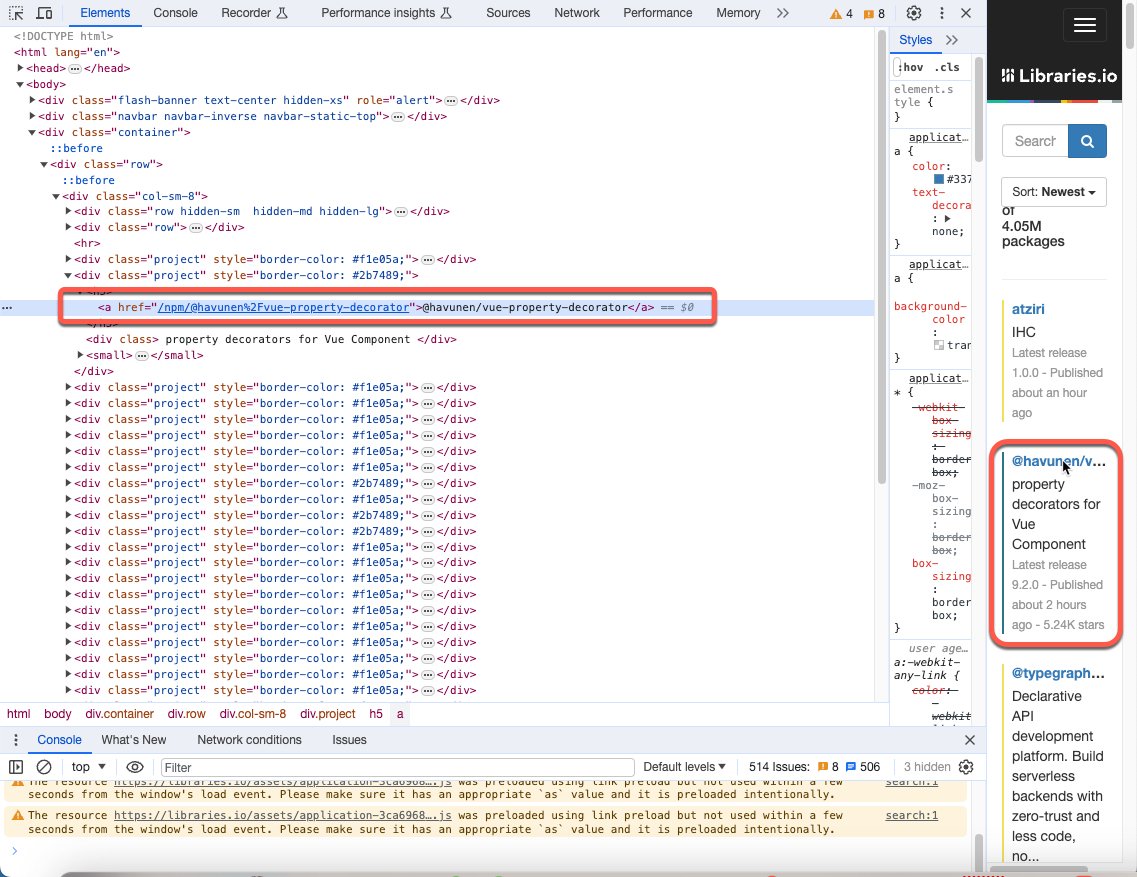

With the selector tool active, move the cursor over a package name and click the link. DevTools then highlights the corresponding HTML in the Elements tab.

Within this element, we can see that the anchor tag's href attribute holds the path to the package's page. We will pass the first part of that path, without the package name (/npm), to our scrape function so that it can filter the page's anchor tags.
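Reducing a package link to that prefix amounts to keeping the first segment of its path. A small sketch using the standard `URL` class; the helper name `pathPrefix` and the default base URL are assumptions for illustration:

```javascript
// Hypothetical helper: keep only the first path segment of a link,
// e.g. '/npm/left-pad' -> '/npm'. Relative hrefs are resolved against `base`.
function pathPrefix(href, base = 'https://libraries.io') {
  const { pathname } = new URL(href, base);
  const [, firstSegment = ''] = pathname.split('/');
  return `/${firstSegment}`;
}
```

After selecting a package link with the selector tool, the DevTools console exposes it as `$0`, so `pathPrefix($0.getAttribute('href'))` would return the prefix for that link.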

Extract Data With JavaScript Using Chrome Developer Tools (DevTools)

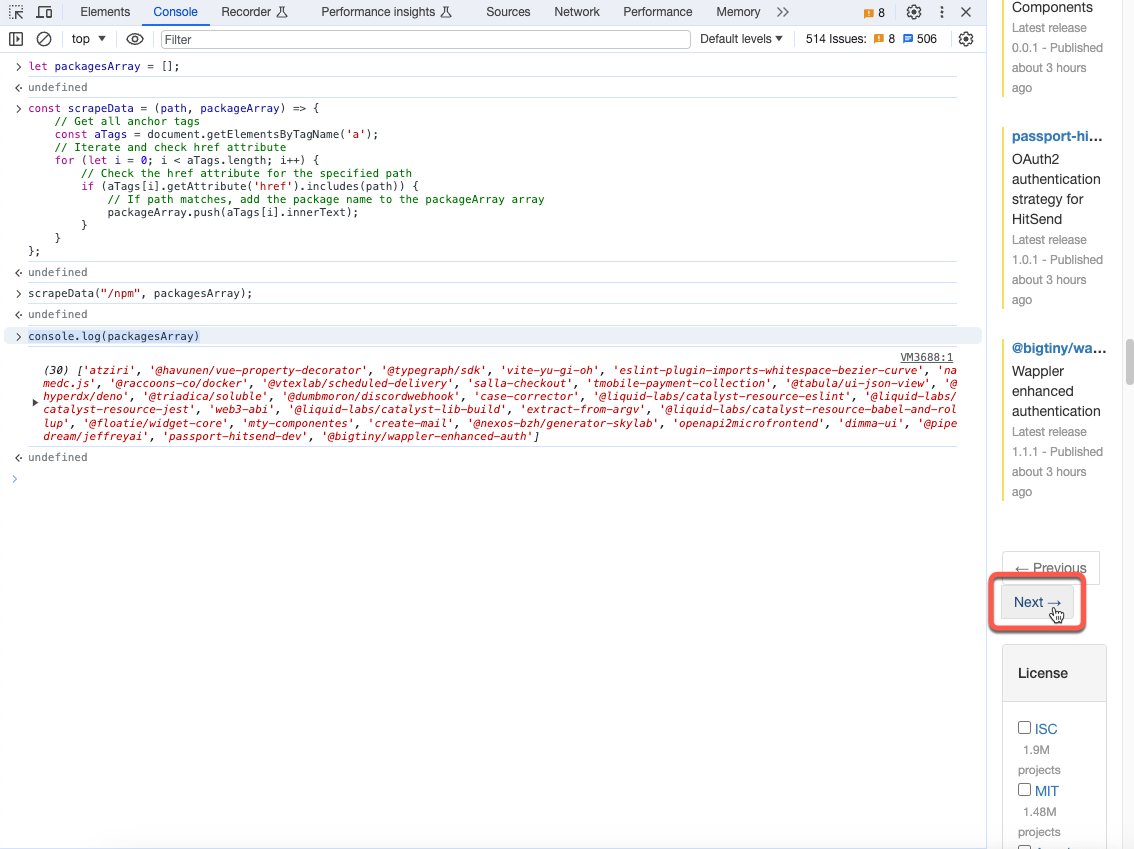

Next, switch back to the JavaScript console, where we can begin writing the scrape function that will do our web scraping.

Let's begin by defining an array that will hold our package names.
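In the console this can be a single top-level declaration. `packagesArray` is the name used throughout the rest of this tutorial:

```javascript
// Holds every package name we scrape. Declared at the top level of the console,
// outside any function, so repeated calls to the scrape function can add to it.
const packagesArray = [];
```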

Next, let's write the scrape function, which takes two inputs: the path we selected, /npm, and our packagesArray.
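A minimal sketch of such a function, reconstructed from the description that follows. The name `scrape` and the exact href test (the path followed by a slash) are assumptions; trimming each name and returning the array are small conveniences added here.

```javascript
// Sketch of the scrape function described in this tutorial (the name `scrape` is assumed).
//   path:          the href prefix that marks package links, e.g. '/npm'
//   packagesArray: an array declared outside the function that collects the results
function scrape(path, packagesArray) {
  // Every anchor (<a>) element currently in the page's DOM.
  const anchors = document.getElementsByTagName('a');

  for (const anchor of anchors) {
    // getAttribute returns the href as written in the HTML (e.g. '/npm/left-pad'),
    // or null when the anchor has no href at all.
    const href = anchor.getAttribute('href') || '';

    // Keep only links that point inside the chosen path, such as package pages.
    if (href.includes(`${path}/`)) {
      // The link's visible text is the package name.
      packagesArray.push(anchor.innerText.trim());
    }
  }

  // Returning the array lets the console display the collected names after each call.
  return packagesArray;
}
```

In the console, `scrape('/npm', packagesArray)` then appends the current page's package names to the array.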

The function first declares a const variable that stores every anchor tag on the page, using document.getElementsByTagName('a'). A for loop then iterates over those anchors, and a simple if statement checks whether each anchor's href attribute includes our path, which isolates the package links we want to scrape. For each matching anchor, we push its innerText (the package name) into packagesArray.

We can now call the scrape function with '/npm' and packagesArray as its arguments to parse the packages on the current page and scrape their names.

We can confirm that the scrape was successful by running console.log(packagesArray), which prints the packagesArray variable to the console.

You may wonder why we didn't declare packagesArray inside our JavaScript function. The next section explains why, along with a powerful scraping hack.

Extract Data From Websites Through Copy() To Clipboard

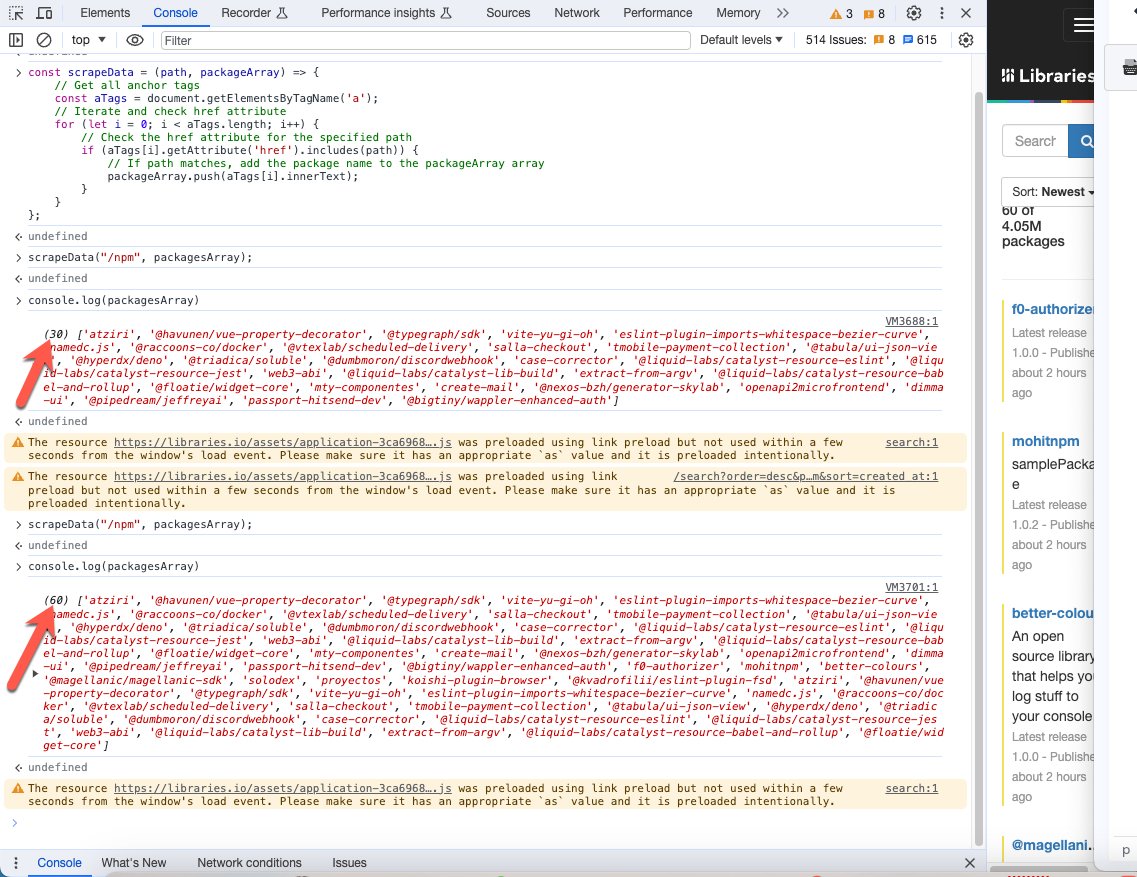

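The main reason we passed packagesArray into the function, rather than declaring it inside, is that we can then populate the array dynamically from page to page: we simply move through the list of packages and re-run the function on each subsequent page. This relies on the array surviving the navigation, because a full page reload clears any variables declared in the console.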
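If clicking Next triggers a full page load, variables declared in the console, including packagesArray, are discarded with the old page. One workaround, not part of the original tutorial, is to keep the collected names in the site's `sessionStorage`, which persists across reloads and same-origin navigation within a tab. The helper names and the storage key are hypothetical:

```javascript
// Hypothetical helpers (not part of the original tutorial): persist scraped names
// in the current site's sessionStorage, which survives reloads and same-origin
// navigation in the same tab but is cleared when the tab is closed.
const STORAGE_KEY = 'scrapedPackages'; // arbitrary key chosen for this sketch

// Read previously saved names, or start with an empty array.
function loadPackages(storage = sessionStorage) {
  const saved = storage.getItem(STORAGE_KEY);
  return saved ? JSON.parse(saved) : [];
}

// Write the full array back as a JSON string (Web Storage only holds strings).
function savePackages(packages, storage = sessionStorage) {
  storage.setItem(STORAGE_KEY, JSON.stringify(packages));
}
```

After each page load, `const packagesArray = loadPackages();` restores the saved names, and `savePackages(packagesArray)` stores the updated list after scraping. A reload clears the helper definitions too, so they must be pasted again on each page; saving them as a DevTools snippet (Sources panel > Snippets) makes that quicker.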

Click the Next button on the packages list to send the network request that navigates the browser to the next page of packages. Once we have verified that we are on the next page, re-run the scrape function.

Re-running our scrape function on different pages grows our array

When we log packagesArray to the console again, we can see that the array has grown from 30 to 60 package names. We could keep clicking Next in the browser, sending a request for each new page, and grow the array indefinitely.
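Because the function only ever appends, running it twice on the same page adds that page's names a second time. A `Set`, which stores each value once, is a simple way to clean that up before exporting; `uniqueValues` is a hypothetical helper name:

```javascript
// Return a new array with duplicates removed, keeping the first occurrence of each value.
// A Set stores each value once and iterates in insertion order.
function uniqueValues(values) {
  return [...new Set(values)];
}
```

`uniqueValues(packagesArray)` returns a deduplicated copy and leaves the original array untouched.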

Once we are satisfied, we can use the DevTools copy() function, for example copy(packagesArray), to copy our array to the clipboard. We can then paste the scraped data into a file on our file system.

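The copy() function is part of the DevTools Console Utilities API, so it is available only in the DevTools console, not in a page's own scripts. It is powerful because it lets developers copy variables and other data directly from the JavaScript console to the clipboard, and from there into files on the file system.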

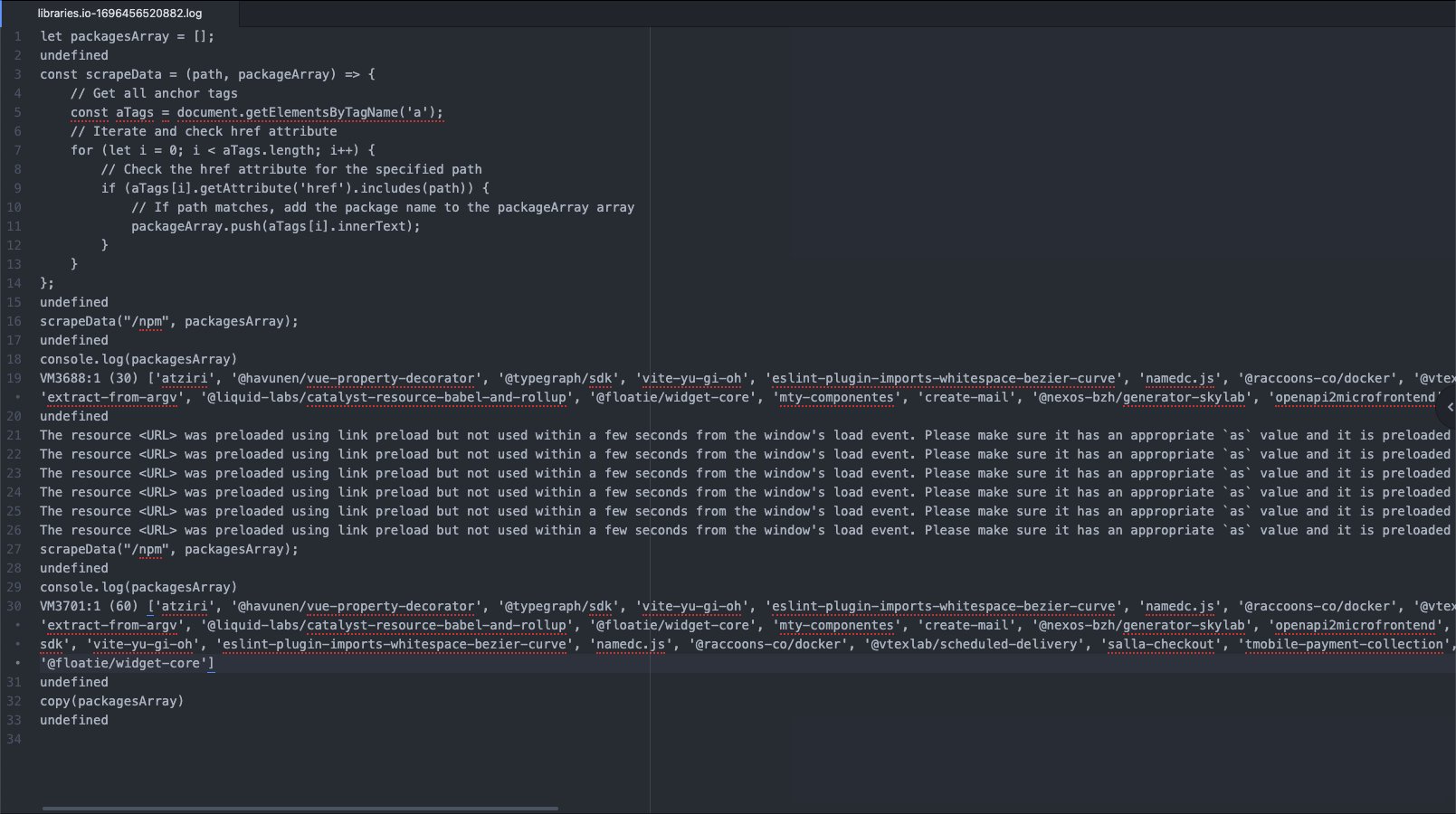
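copy() accepts strings as well as objects. If you plan to paste into a spreadsheet, a small helper (hypothetical, not part of DevTools) can first format the array as single-column CSV text, quoting any value that contains a comma, double quote, or line break:

```javascript
// Hypothetical helper: turn an array of strings into single-column CSV text.
// Values containing commas, double quotes, or line breaks are wrapped in double
// quotes, with embedded quotes doubled, following the usual CSV quoting convention.
function toCsv(values, header = 'package') {
  const escape = (value) => {
    const text = String(value);
    return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
  };
  return [header, ...values].map(escape).join('\n');
}
```

In the DevTools console, `copy(toCsv(packagesArray))` then places the CSV text on the clipboard.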

Scraping Data to A File

If you'd prefer to save scraped data straight to a file, right-click anywhere in the JavaScript console and select Save as..., which saves the entire console output to a file on your file system.

This DevTools feature is useful precisely because it saves everything in the console. Developers can also use it to troubleshoot objects, save functions for later, test locally, store logs, and more.
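If you only want the array rather than the whole console log, the standard Blob and object-URL browser APIs offer another route. This is a different technique from the one used in this tutorial, and the helper name and default file name are hypothetical:

```javascript
// Hypothetical helper, an alternative to saving the whole console log:
// download `text` as a file by pointing a temporary link at a Blob URL.
function downloadText(text, filename = 'packages.json') {
  const blob = new Blob([text], { type: 'text/plain' });
  const url = URL.createObjectURL(blob); // temporary URL that refers to the Blob

  const link = document.createElement('a');
  link.href = url;
  link.download = filename; // the download attribute suggests the saved file's name
  document.body.appendChild(link); // attaching first is a common precaution for older browsers
  link.click();
  link.remove();

  // Release the Blob URL after the click has been processed.
  setTimeout(() => URL.revokeObjectURL(url), 0);
}
```

In the console, `downloadText(JSON.stringify(packagesArray, null, 2))` would save the array as formatted JSON, subject to the browser's normal download settings.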

Conclusion: Scraping Data With Chrome Developer Tools (DevTools)

In this tutorial, I showed you a powerful way to extract data with the Chrome Developer Tools through browser web scraping. This method represents a balanced and considerate approach to extracting data from websites. Unlike traditional web scraping methods that bombard servers with numerous requests, browser web scraping relies on the user's direct actions to send network requests and then extracts the web data. This not only ensures a more respectful interaction with web servers but also aligns with ethical scraping practices.

We also looked at the Google Chrome Developer Tools at a high level: their primary uses for web developers and programmers, handy DevTools shortcuts, selecting HTML elements with the inspector tool, and writing JavaScript code to scrape data in the browser. Finally, we demonstrated the built-in DevTools copy() command, which doubles as a powerful scraping hack.

This project is also available on GitHub. If you have any questions, feel free to connect on social media. Happy scraping!